
When "Too Powerful to Release" Meets "Too Deep to Hide": Deconstructing Adversarial Poetry with Layer-Wise Analysis

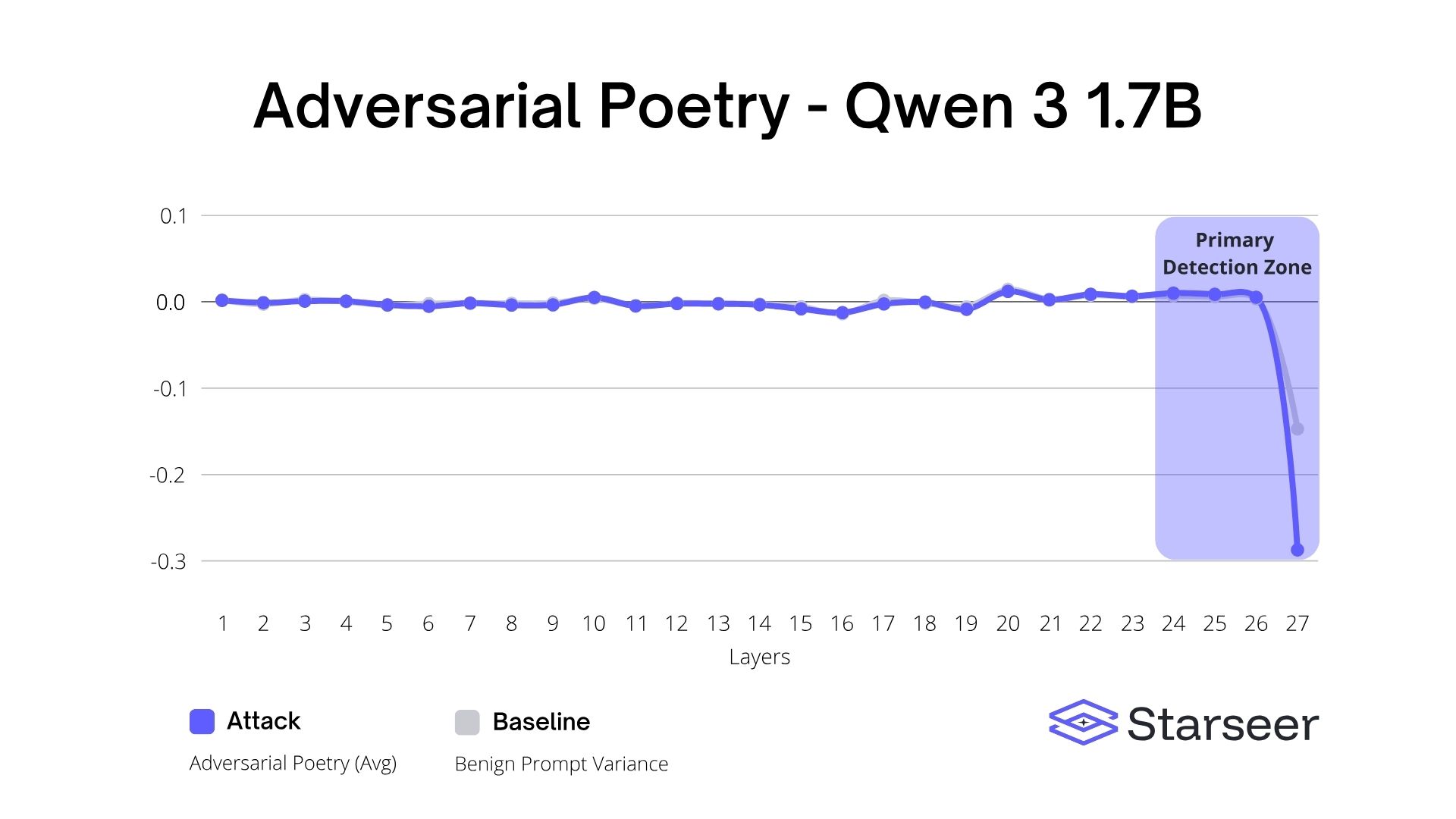

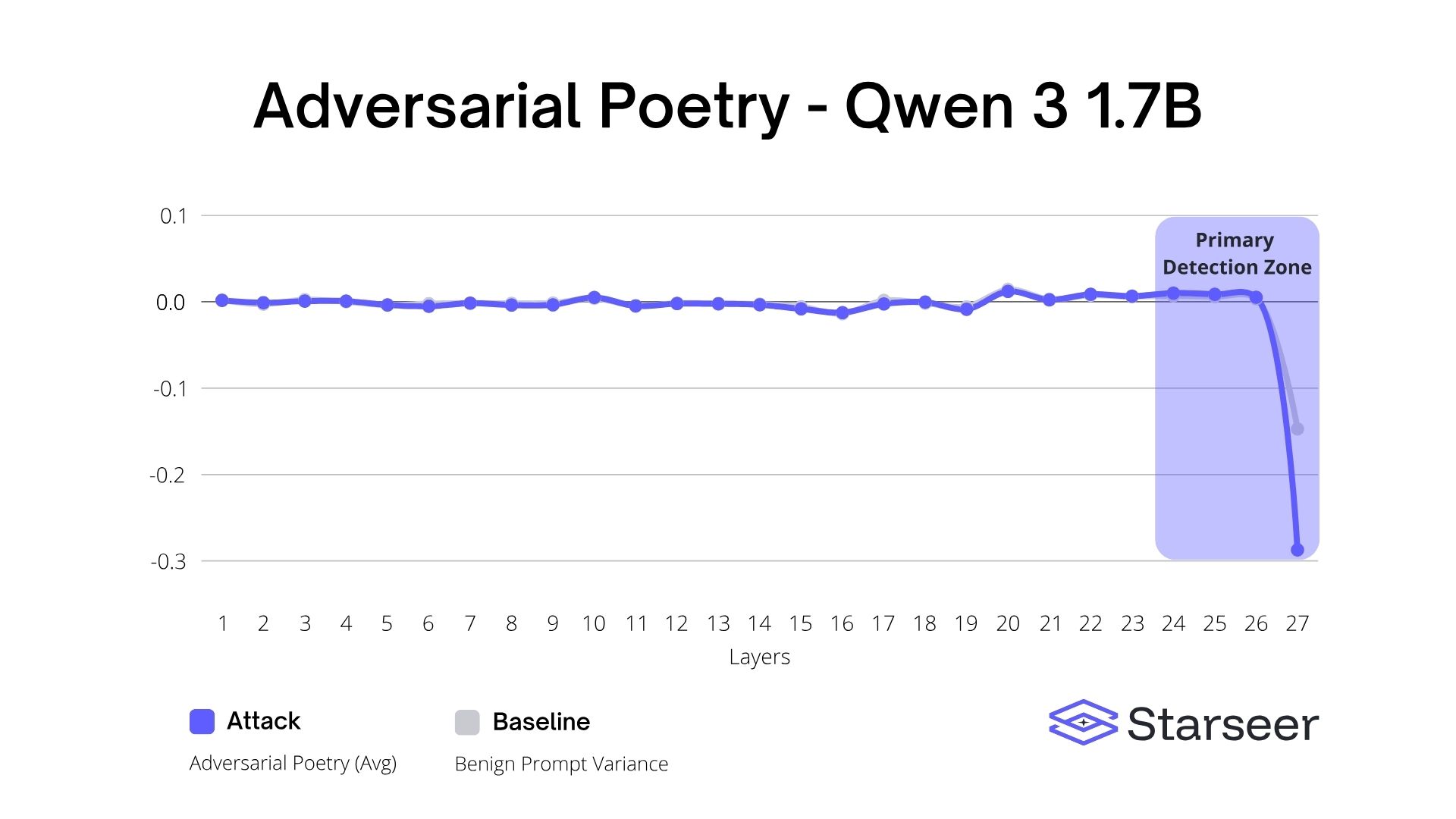

A recent “adversarial poetry” jailbreak was claimed to be too dangerous to release. Using Starseer’s interpretability-based analysis, we reconstructed similar prompts, tested them across Llama, Qwen, and Phi models, and found consistent anomaly signatures inside the models that make detection possible, even without access to the original attack prompts.
